Open Position - Internship (Lighting Design)

“Can You Manipulate Light the Way You Imagine It?”: Exploring Interfaces for Exhibition Lighting Design

Keywords

Interaction Design, Participatory Design, Lighting Design, Generative AI, Human-AI collaboration

Project Supervisors

Francesco Dettori, Christian Sandor, Huyen Nguyen.

Emails: francesco.dettori@universite-paris-saclay.fr, christian.sandor@universite-paris-saclay.fr, thi-thuong-huyen.nguyen@universite-paris-saclay.fr

Description

This internship is part of MuseoXR, a CNRS-funded project that investigates new tools for collaborative exhibition lighting design. A preliminary study with curators, scenographers, and lighting designers revealed three recurring challenges:

- Different ways of describing light: Professionals from different fields use distinct vocabularies (narrative, perceptual, or technical), making it hard to align creative and practical decisions.

- Design and tool desynchronization: Designs evolve so rapidly that models, photometric simulations, and pre-visualizations often fall out of sync. As a result, these tools are rarely used in practice despite their potential value.

- Manual trial-and-error: To translate a creative vision into a real lighting setup, designers must manually browse and test hundreds of technical files.

Existing tools like DIALux enable precise photometric simulation but are too slow and rigid for early creative exploration. AI-based tools can manipulate lighting in images, but still lack the precision and controllability that professionals require. Recent advances in generative AI make it possible to control lighting directly in 2D images [Magar, 2025; Careaga, 2025], while new rendering methods now support accurate simulation of real luminaires using IES data [Gadia, 2024]. These technologies establish a strong foundation, but the interface challenge remains.

This raises a key HCI question: How can we design an interface that allows users with different expertise to communicate and manipulate lighting ideas naturally, and in a way that can be translated into measurable photometric data?

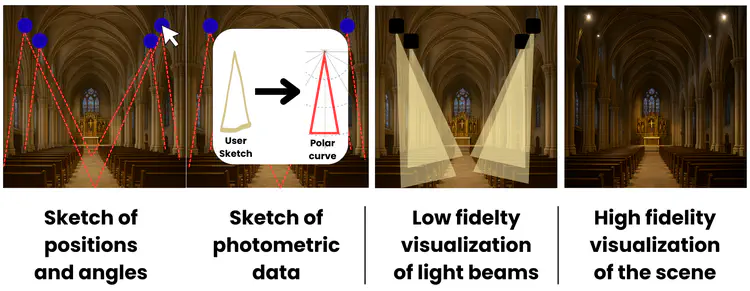

At the ARAI team, we are developing a system that reconstructs lighting configurations from sketches, descriptions, or photos - the image below illustrates the concept.

Preliminary observations show that non-experts draw fan shapes, professionals use cones and numeric parameters, and curators describe effects through words like “glowing” or “alive.” This diversity reveals a gap between how people think about light and how existing systems represent it.

This internship focuses on the design and evaluation of interfaces that help users express lighting ideas across backgrounds and expertise levels.

The intern will:

- Conduct participatory and comparative design sessions with both professionals and non-experts. These sessions will include collective workshops to observe how ideas are negotiated, and individual tasks to explore personal strategies.

- Analyze the ways people use drawing, speech, gestures, and image references to describe lighting conditions or desired effects.

- Develop high-fidelity prototypes of sketch-based, text-based, or combined interfaces that allow users to express lighting without working in 3D.

- Iterate and evaluate the prototypes through user testing, assessing clarity, control, and how effectively the system can convert input into structured lighting data.

The final prototype will serve as a “front-end” to our AI system, acting as a shared language between users and models, one that can transform abstract ideas into photometrically accurate and physically realizable lighting configurations. It will contribute directly to faster, more accessible lighting workflows for museum design teams.

Relevant skills to have:

- Solid foundation in Human-Computer Interaction and user-centered design.

- Experience with interface prototyping (Figma, Adobe XD, or code-based).

- Interest in participatory design and qualitative methods.

- Familiarity with generative AI for images or visual computing is a plus.

- Good communication skills in English.

Expected outcome:

To be defined in collaboration with the team. Likely deliverables include a structured design study, interface prototypes, and guidelines for intuitive lighting manipulation tools integrated into the MuseoXR platform.

Apply

To express your interest, please send the following application materials to Francesco Dettori, with Huyen Nguyen and Christian Sandor in cc:

- CV

- Transcript of Records

- Link to portfolio (GitHub, personal homepage, etc.)

References

[Careaga, 2025] Careaga, C. and Aksoy, Y. (2025). Physically Controllable Relighting of Photographs. SIGGRAPH Conference Papers, 1–10.

[Gadia, 2024] Gadia, D., Lombardo, V., Maggiorini, D., Natilla, A. (2024). Implementing many-lights rendering with IES-based lights. Applied Sciences, 14(3), 1022.

[Magar, 2025] Magar, N., Hertz, A., Tabellion, E., Pritch, Y., Rav-Acha, A., Shamir, A., Hoshen, Y. (2025). LightLab: Controlling light sources in images with diffusion models. SIGGRAPH Conference Papers, 1–11.